The Secure, Reliable, and Intelligent Systems (SRI) Lab is a research group in the Department of Computer Science at ETH Zurich.

Our research focuses on reliable, secure, and trustworthy machine learning, with an emphasis on large language models.

We currently study the controllability, security and privacy, and reliable evaluation of LLMs, as well as their application to mathematical reasoning and coding. Our work also covers generative AI watermarking, AI regulation, privacy in federated learning, robustness and fairness certification, and quantum computing.

Our work has led to six ETH spin-offs: NetFabric (AI for systems), LogicStar (AI code agents), LatticeFlow (robust ML), InvariantLabs (secure AI agents; acquired), DeepCode (AI for code; acquired), and ChainSecurity (security verification; acquired).

To learn more about our work, see our Research page, recent Publications, and GitHub releases. To stay up to date, follow our group on Twitter.

Latest News

12.04.2026

SRI Lab is presenting 8 papers in the main track of ICLR 2026 in Rio de Janeiro, Brazil. Two of them were selected for oral presentations.

24.02.2026

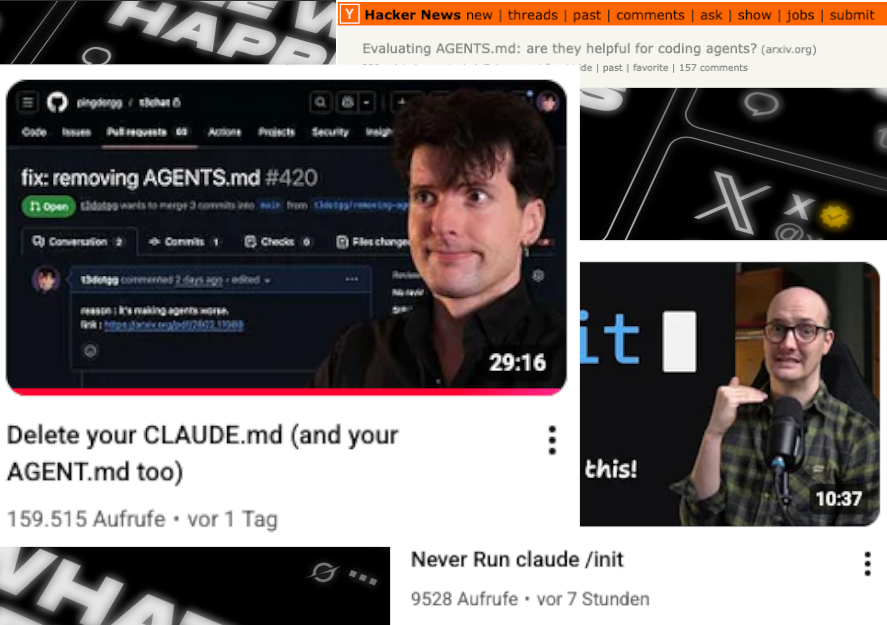

Our work on the effect of context files on coding agents is trending on X (formerly Twitter) and Hacker News after being featured in several high-profile YouTube videos.

24.10.2025

Our work on sycophantic behavior in large language models was featured in a Nature article on the risks of LLM sycophancy in scientific research.

Most Recent Publications

Constrained Decoding of Diffusion LLMs with Context-Free Grammars

Niels Mündler, Jasper Dekoninck, Martin Vechev

ICLR 2026

DL4C @ NeurIPS'25 Oral


Expressiveness of Multi-Neuron Convex Relaxations in Neural Network Certification

Yuhao Mao*, Yani Zhang*, Martin Vechev

ICLR 2026

* Equal contribution

LLM Fingerprinting via Semantically Conditioned Watermarks

Thibaud Gloaguen, Robin Staab, Nikola Jovanović, Martin Vechev

ICLR 2026

Oral
