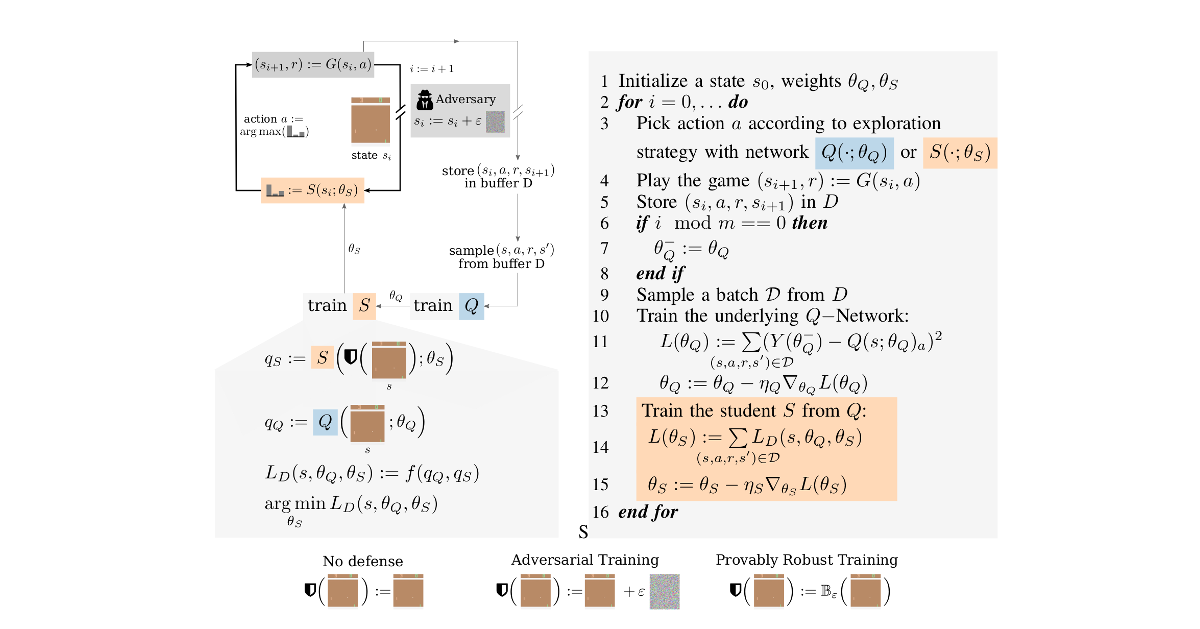

In deep reinforcement learning (RL), adversarial attacks can trick an agent into unwanted states and disrupt training. We propose a system called Robust Student-DQN (RS-DQN), which permits online robustness training alongside Q networks, while preserving competitive performance. We show that RS-DQN can be combined with (i) state-of-the-art adversarial training and (ii) provably robust training to obtain an agent that is resilient to strong attacks during training and evaluation.

@article{fischer2019saferl, title={Online Robustness Training for Deep Reinforcement Learning}, author={Marc Fischer and Matthew Mirman and Steven Stalder and Martin Vechev}, journal={arXiv preprint arXiv:1911.00887}, year={2019}}
