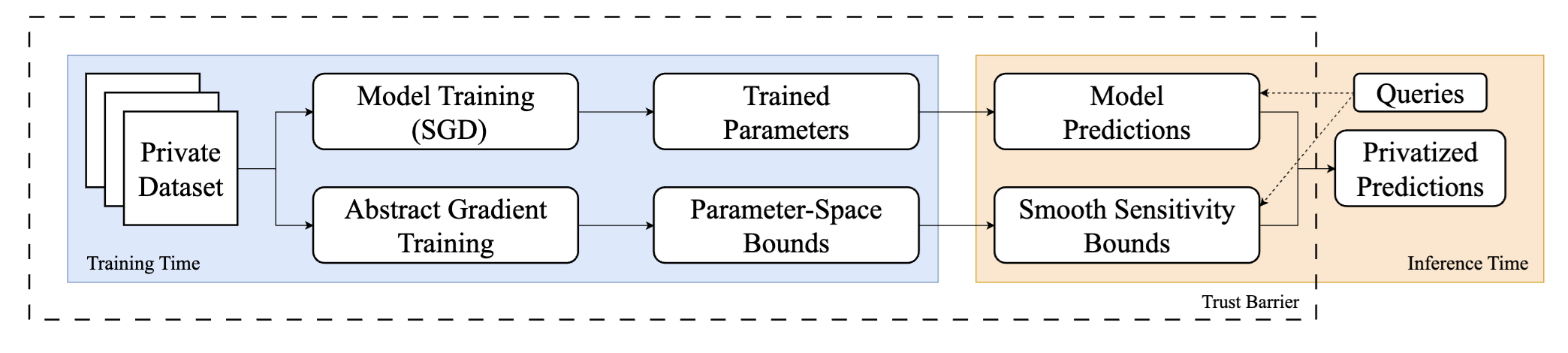

We study private prediction, where differential privacy is achieved by adding noise to the outputs of a non-private model. Existing methods add noise proportional to the global sensitivity of the model, which often results in sub-optimal privacy-utility trade-offs compared to private training. We introduce a novel approach for computing dataset-specific upper bounds on prediction sensitivity by leveraging convex relaxation and bound propagation techniques. By combining these bounds with the smooth sensitivity mechanism, we significantly improve the privacy analysis of private prediction compared to approaches based on global sensitivity. Experimental results on real-world datasets in medical image classification and natural language processing demonstrate that our sensitivity bounds can be orders of magnitude tighter than global sensitivity. Our approach provides a strong basis for the development of novel privacy-preserving technologies.
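The abstract combines two ingredients: bound propagation, to upper-bound how far a prediction can move when the training data change, and the smooth sensitivity mechanism, to calibrate output noise to those dataset-specific bounds instead of the global one. The following is a minimal pure-Python sketch of how such pieces could fit together; it is not the authors' method. It assumes a hypothetical toy network (`W1`, `B1`, `W2`, `B2`) and a hypothetical `param_radius(k)` describing how far any parameter can move when up to `k` training points change (in the paper such bounds come from analysing gradient-based training, which is not reproduced here). Interval bound propagation, the simplest convex relaxation, stands in for the paper's relaxations, and the noise uses the one-dimensional Laplace calibration of smooth sensitivity as usually stated following Nissim, Raskhodnikova and Smith (2007), with α = ε/2 and β = ε/(2 ln(2/δ)). Whether the resulting maximum is a valid β-smooth upper bound depends on how the per-`k` bounds are constructed; the sketch takes that property as given.

```python
import math
import random

# Hypothetical toy model (not from the paper): a 2-4-1 ReLU network with a sigmoid
# output, so every prediction lies in [0, 1] and the global sensitivity is at most 1.
W1 = [[0.5, -0.3], [0.2, 0.8], [-0.6, 0.1], [0.4, 0.4]]
B1 = [0.1, -0.2, 0.0, 0.05]
W2 = [[0.7, -0.5, 0.3, 0.9]]
B2 = [-0.1]
GLOBAL_SENSITIVITY = 1.0


def param_radius(k):
    """Hypothetical: max change of any parameter when up to k training points change."""
    return 0.001 * k


def shift(vec, delta):
    return [v + delta for v in vec]


def interval_affine(w_lo, w_hi, b_lo, b_hi, x_lo, x_hi):
    """Sound elementwise bounds on W @ x + b for W, b, x each ranging over a box."""
    out_lo, out_hi = [], []
    for row_lo, row_hi, bl, bh in zip(w_lo, w_hi, b_lo, b_hi):
        lo, hi = bl, bh
        for wl, wh, xl, xh in zip(row_lo, row_hi, x_lo, x_hi):
            corners = (wl * xl, wl * xh, wh * xl, wh * xh)  # interval product
            lo += min(corners)
            hi += max(corners)
        out_lo.append(lo)
        out_hi.append(hi)
    return out_lo, out_hi


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def prediction_interval(x, radius):
    """Interval containing the output for every parameter within `radius` of the trained ones."""
    h_lo, h_hi = interval_affine(
        [shift(r, -radius) for r in W1], [shift(r, radius) for r in W1],
        shift(B1, -radius), shift(B1, radius), x, x)
    # ReLU is monotone, so it maps interval endpoints to interval endpoints.
    h_lo = [max(0.0, v) for v in h_lo]
    h_hi = [max(0.0, v) for v in h_hi]
    z_lo, z_hi = interval_affine(
        [shift(r, -radius) for r in W2], [shift(r, radius) for r in W2],
        shift(B2, -radius), shift(B2, radius), h_lo, h_hi)
    return sigmoid(z_lo[0]), sigmoid(z_hi[0])  # sigmoid is monotone too


def local_sensitivity_bound(x, k):
    """Upper bound on A^(k)(x), the largest local sensitivity within distance k.

    A dataset within distance k and any neighbour of it are both within distance
    k + 1, so both predictions lie in the interval for param_radius(k + 1).
    """
    lo, hi = prediction_interval(x, param_radius(k + 1))
    return min(hi - lo, GLOBAL_SENSITIVITY)


def smooth_sensitivity_bound(x, beta, max_k):
    """max over k of e^(-k*beta) * A^(k)(x); the global bound covers all k > max_k."""
    best = math.exp(-(max_k + 1) * beta) * GLOBAL_SENSITIVITY
    for k in range(max_k + 1):
        best = max(best, math.exp(-k * beta) * local_sensitivity_bound(x, k))
    return best


def private_predict(x, epsilon, delta, max_k=1000, rng=None):
    """Return (noisy prediction, smooth sensitivity bound) using 1-D Laplace noise.

    Calibration as usually stated for smooth sensitivity with Laplace noise:
    alpha = epsilon / 2, beta = epsilon / (2 ln(2 / delta)), noise scale S / alpha.
    """
    rng = random.Random() if rng is None else rng
    beta = epsilon / (2.0 * math.log(2.0 / delta))
    s = smooth_sensitivity_bound(x, beta, max_k)
    prediction, _ = prediction_interval(x, 0.0)  # radius 0: the exact prediction
    laplace = rng.expovariate(1.0) - rng.expovariate(1.0)  # Laplace(0, 1) sample
    return prediction + (s / (epsilon / 2.0)) * laplace, s


if __name__ == "__main__":
    noisy, s = private_predict([0.3, -1.2], epsilon=0.5, delta=1e-5, rng=random.Random(0))
    print(f"smooth sensitivity bound {s:.4f} (global bound {GLOBAL_SENSITIVITY}), "
          f"noisy prediction {noisy:.4f}")
```

Capping each per-`k` bound at the global bound of 1 keeps the noise scale no larger than the global-sensitivity baseline, while the exponential discount e^(-kβ) lets tight bounds at small `k` dominate; the term for `k > max_k` only needs the global bound because it is multiplied by e^(-(max_k + 1)β).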

@inproceedings{ wicker2025certification, title={Certification for Differentially Private Prediction in Gradient-Based Training}, author={Matthew Robert Wicker and Philip Sosnin and Igor Shilov and Adrianna Janik and Mark Niklas Müller and Yves-Alexandre de Montjoye and Adrian Weller and Calvin Tsay}, booktitle={The Forty-Second International Conference on Machine Learning}, year={2025}, url={https://openreview.net/forum?id=viXwXCkA7N} }