SRI Lab at ICLR 2022

Aggregated by Nikola Jovanović, Mislav Balunović

April 25, 2022

The Tenth International Conference on Learning Representations (ICLR 2022) will be held virtually from April 25th through April 29th. We are thrilled to share that SRI Lab will present five works at the conference, including one spotlight presentation! In this meta post you can find all relevant information about our work, including presentation times, as well as a dedicated blog post presenting each work in more detail. We look forward to meeting you at ICLR!

Fair Normalizing Flows

Authors: Mislav Balunović, Anian Ruoss, Martin Vechev

Keywords: fair representation learning, normalizing flows

Conference Page: https://iclr.cc/virtual/2022/poster/7045

Poster - Mon Apr 25 19:30 UTC+2 (Poster Session 2)

Provably Robust Adversarial Examples

Authors: Dimitar I. Dimitrov, Gagandeep Singh, Timon Gehr, Martin Vechev

Keywords: adversarial examples, robustness

Conference Page: https://iclr.cc/virtual/2022/poster/6160

Poster - Tue Apr 26 19:30 UTC+2 (Poster Session 5)

Complete Verification via Multi-Neuron Relaxation Guided Branch-and-Bound

Authors: Claudio Ferrari, Mark Niklas Müller, Nikola Jovanović, Martin Vechev

Keywords: certified robustness, adversarial examples

Conference Page: https://iclr.cc/virtual/2022/poster/6097

Poster - Wed Apr 27 11:30 UTC+2 (Poster Session 7)

Bayesian Framework for Gradient Leakage

Authors: Mislav Balunović, Dimitar I. Dimitrov, Robin Staab, Martin Vechev

Keywords: federated learning, privacy, gradient leakage

Conference Page: https://iclr.cc/virtual/2022/poster/6934

Poster - Wed Apr 27 19:30 UTC+2 (Poster Session 8)

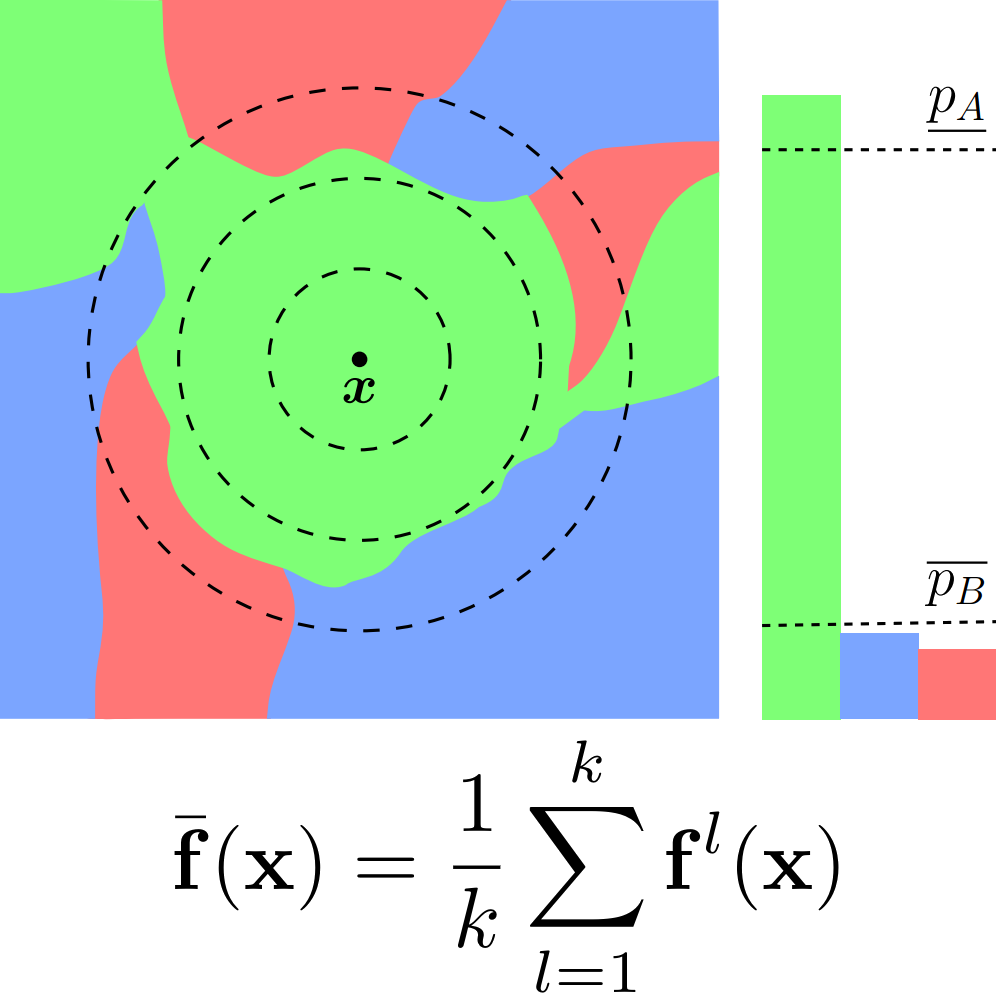

Boosting Randomized Smoothing with Variance Reduced Classifiers (Spotlight)

Authors: Miklós Z. Horváth, Mark Niklas Müller, Marc Fischer, Martin Vechev

Keywords: randomized smoothing, certified robustness, ensembles

Conference Page: https://iclr.cc/virtual/2022/spotlight/6328

Spotlight - Thu Apr 28 19:30 UTC+2 (Poster Session 11)
